-

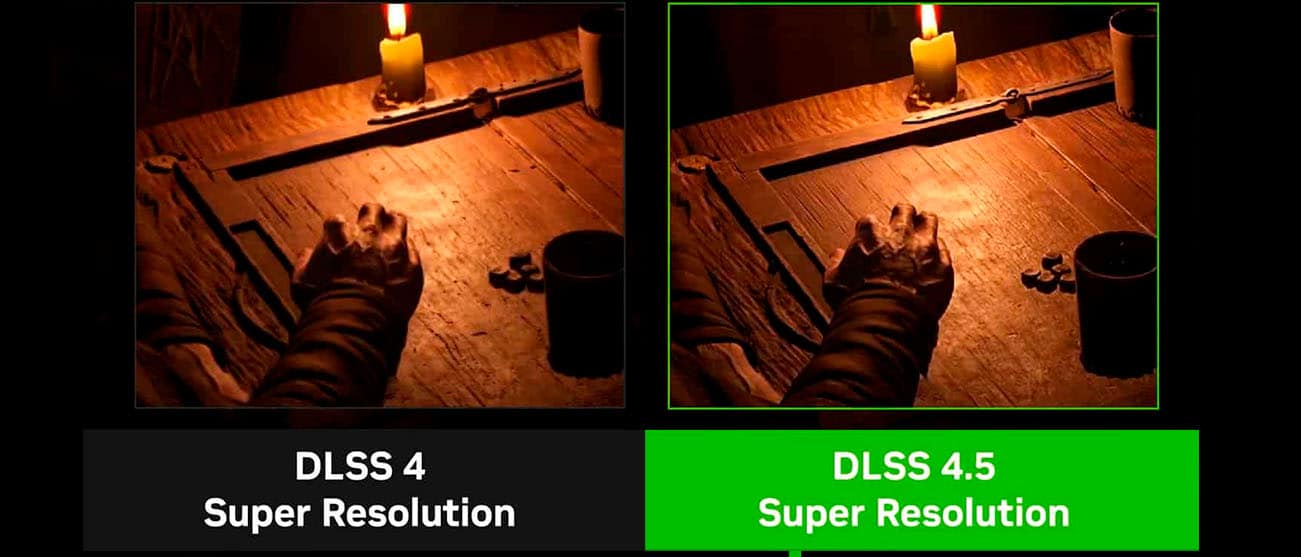

DLSS 4.5 significantly improves image quality using a heavier 2nd-gen Transformer model, with minimal impact (2–3%) on RTX 40/50 GPUs thanks to newer Tensor cores and FP8 support.

-

RTX 20 and 30 series lack FP8 and newer Tensor cores, causing major performance penalties when using DLSS 4.5.

-

Early tests show up to 20–24% performance loss and higher VRAM usage on older GPUs, making DLSS 4.5 costly for RTX 20/30 users.

With the release of the drivers that enable the new NVIDIA DLSS 4.5 Super Resolution, we can now see the performance cost of improving visual quality. If you’re unfamiliar with it, DLSS 4.5 introduces the new 2nd Generation Transformer model.

In short, the new model improves visual quality when DLSS upscaling is enabled. It also reduces, and in certain circumstances eliminates, artifacts such as ghosting, shimmering, and unstable edges. At the same time, it improves sharpness in motion, especially in areas where DLSS previously struggled, such as dense vegetation, fine particles, or blur.

All these improvements come at a performance cost. The new model is heavier, and, as expected, the NVIDIA GeForce RTX 50 series, the most advanced cards, suffers the smallest performance hit.

In practice, the current-gen RTX 50 series loses around 2–3% of performance compared to the previous Transformer model, in exchange for greater image definition and fewer or no artifacts. Even on the RTX 40 series the impact is small, simply because these cards have better Tensor cores and FP8 hardware acceleration, which speed up inference.

DLSS 4.5 Faces Major Issues on Older RTX 20 & 30 GPUs

The problem with NVIDIA DLSS 4.5 Super Resolution arises when the 2nd Generation Transformer model runs on GeForce RTX 30 and RTX 20 series graphics cards. The main reason is that these cards lack hardware support for the FP8 format. On top of that, they have fewer Tensor cores, and of an older generation. As a result, the fixed cost of upscaling weighs much more heavily on performance, and early tests clearly show noticeable drops.
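The "fixed cost" argument can be made concrete with a simple frame-time model: if upscaling adds a constant number of milliseconds to every frame, the resulting frame rate is `1000 / (1000 / base_fps + cost_ms)`. The sketch below assumes that simplified model, and the per-frame costs it uses are purely hypothetical (they are not measured DLSS figures). It illustrates two points: the same extra milliseconds remove a larger share of frames at a higher base frame rate, and a GPU that needs more milliseconds to run the same model loses more.

```python
def fps_with_fixed_cost(base_fps: float, cost_ms: float) -> float:
    """Frame rate after adding a constant per-frame cost (simplified model)."""
    base_frame_ms = 1000.0 / base_fps
    return 1000.0 / (base_frame_ms + cost_ms)


def relative_loss(base_fps: float, cost_ms: float) -> float:
    """Fraction of frames lost when cost_ms is added to every frame."""
    return 1.0 - fps_with_fixed_cost(base_fps, cost_ms) / base_fps


if __name__ == "__main__":
    # Hypothetical per-frame upscaling costs, NOT measured values:
    # a newer GPU with FP8 support vs. an older GPU without it.
    hypothetical_costs_ms = {
        "newer GPU (hypothetical)": 0.5,
        "older GPU (hypothetical)": 2.0,
    }
    for label, cost in hypothetical_costs_ms.items():
        for base in (40.0, 60.0, 120.0):
            new_fps = fps_with_fixed_cost(base, cost)
            print(f"{label}: {base:.0f} FPS -> {new_fps:.1f} FPS "
                  f"({relative_loss(base, cost):.1%} lost)")
```

Real upscaling cost also varies with output resolution and preset, so this is only a first-order illustration of why slower Tensor hardware hurts more.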

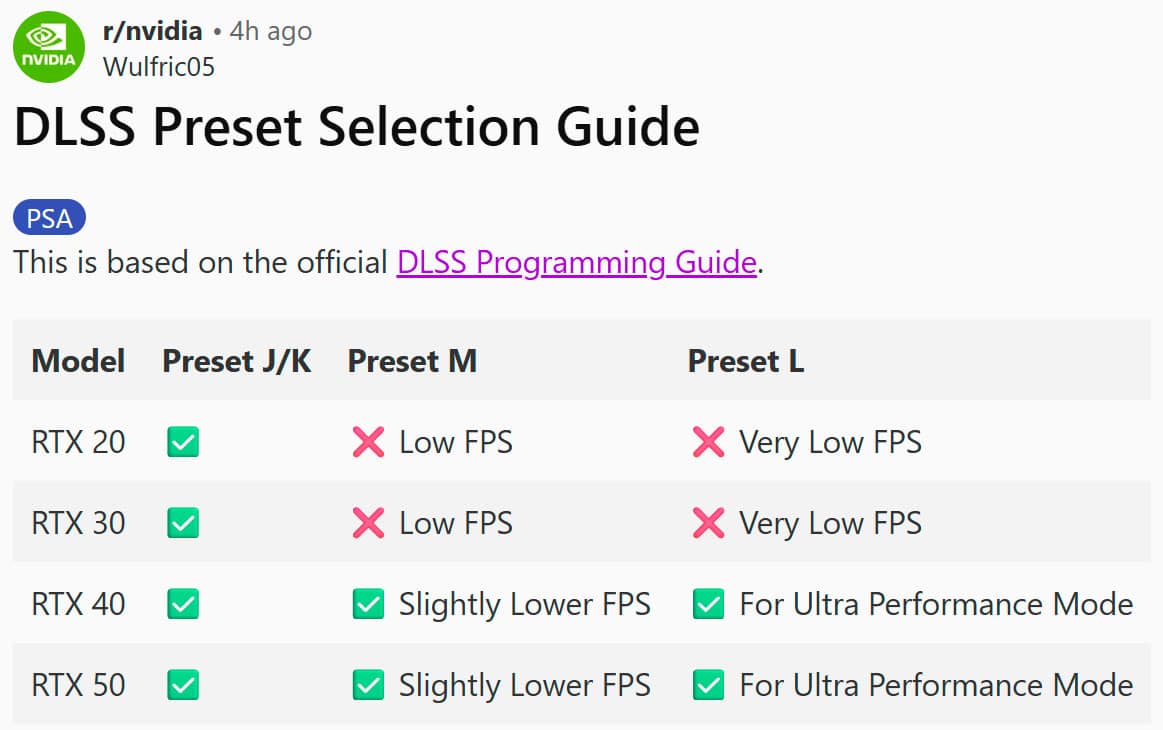

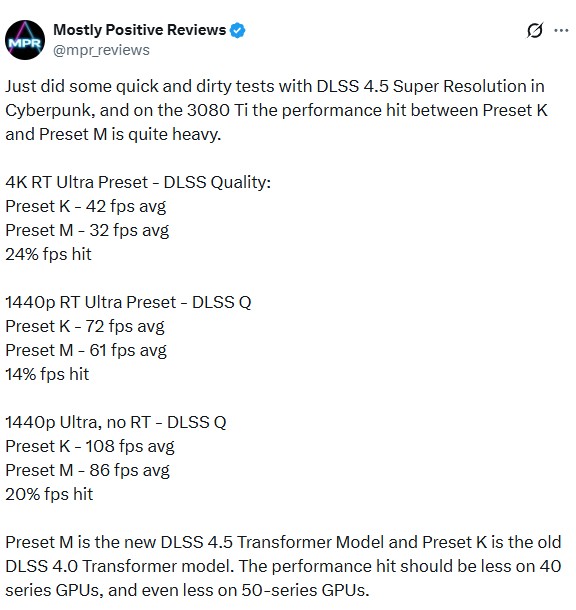

The performance tests published by Mostly Positive Reviews (MPR) are devastating. For context, the DLSS programming guide lists several presets, and it already indicates that some of them are not recommended for the GeForce RTX 30 and 20 series because performance on those cards is very low:

- Preset K: Default for DLAA, Quality and Balanced, with less overhead than the new presets.

- Preset M: Optimized and recommended for DLSS Super Resolution Performance mode.

- Preset L: Optimized and recommended for 4K DLSS Super Resolution Ultra Performance mode.

- Preset J: Close to K, with compromises (less ghosting in some cases, higher risk of flickering).

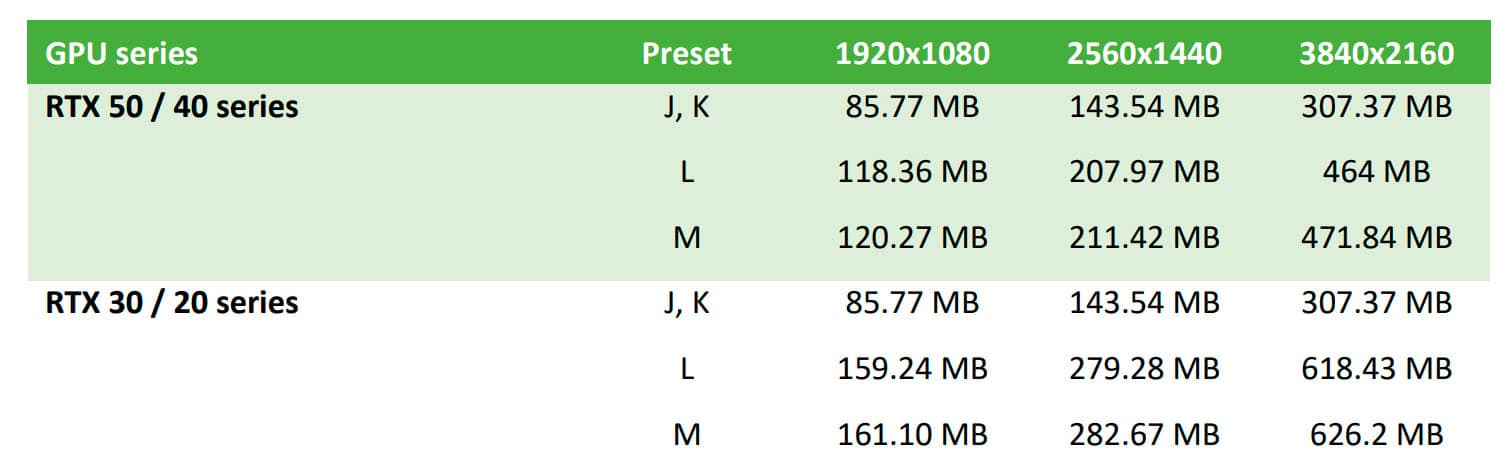

According to the table provided by NVIDIA, only Presets J and K are recommended for GeForce RTX 30 and RTX 20 series cards. These presets trade some image quality for higher performance. To quantify the difference, MPR compared Presets M and K with DLSS 4.5 Super Resolution on a GeForce RTX 3080 Ti in Cyberpunk 2077.

GeForce RTX 3080 Ti Sees Up to a 24% Performance Drop

At 4K resolution, with Ultra graphics settings and DLSS on Quality, the RTX 3080 Ti averaged 42 FPS with Preset K compared to 32 FPS with Preset M, a 24% performance drop. At 1440p, the drop was 14%, and at 1440p without ray tracing, it was 20%. In The Last of Us Part II at 4K, performance fell from 154 to 135 FPS, a loss of roughly 12%.
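The percentages follow from a simple relative-drop formula, `(before - after) / before`. The short sketch below applies it to the FPS pairs quoted above; the function name is illustrative only. The result depends on using the higher figure as the baseline: dividing by the lower figure instead produces a larger percentage.

```python
def percent_drop(before_fps: float, after_fps: float) -> float:
    """Performance loss as a percentage of the original (higher) frame rate."""
    if before_fps <= 0:
        raise ValueError("before_fps must be positive")
    return (before_fps - after_fps) / before_fps * 100.0


# FPS pairs quoted in the text (Preset K -> Preset M, or before -> after).
results = {
    "Cyberpunk 2077, 4K Ultra, DLSS Quality, RTX 3080 Ti": (42, 32),
    "The Last of Us Part II, 4K": (154, 135),
}

for test_name, (before, after) in results.items():
    print(f"{test_name}: {percent_drop(before, after):.1f}% lower")
```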

Then there’s another problem: DLSS 4.5 consumes more GPU memory. As a result, GPUs with 8GB of VRAM, which were already barely adequate for today’s games, could suffer from stuttering or performance drops. At 4K, the extra VRAM usage on an RTX 30 or 20 series card more than doubles, as these cards compensate for the limitations of older hardware.

A GeForce RTX 3060 went from 56 FPS without AA to 52 FPS with the K preset and 38 FPS with the L or M presets in Cyberpunk 2077. Therefore, this technology is costly for older graphics cards, but for modern ones, it barely makes a difference.
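Converting frame rates into frame times shows how much extra work each preset adds to every frame. The sketch below does this for the RTX 3060 figures quoted above; treating the difference as "added milliseconds" is a simplification that assumes the rest of the frame's cost stays the same.

```python
def frame_time_ms(fps: float) -> float:
    """Average time to render one frame, in milliseconds."""
    return 1000.0 / fps


# RTX 3060 in Cyberpunk 2077, as quoted in the text.
baseline_fps = 56  # without AA
preset_fps = {"Preset K": 52, "Preset L/M": 38}

base_ms = frame_time_ms(baseline_fps)
for preset, fps in preset_fps.items():
    added_ms = frame_time_ms(fps) - base_ms
    loss = (baseline_fps - fps) / baseline_fps
    print(f"{preset}: {fps} FPS, +{added_ms:.2f} ms per frame, "
          f"{loss:.1%} fewer frames")
```

Seen this way, Preset K adds well under 2 ms per frame on this card, while Presets L and M add several times as much.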

On average, then, the RTX 30 and 20 series lose about 20% of their performance, compared to a maximum of 3% for the RTX 50 series. On the RTX 50 series, the trade-off is clearly worth it: according to Jacob Freeman, an expert in DLSS and RTX Remix at NVIDIA, the very small performance loss buys a massive improvement in image quality.


[Editor-in-Chief]

Sajjad Hussain is the Founder and Editor-in-Chief of Tech4Gamers.com. Apart from the tech and gaming scene, Sajjad is a seasoned banker who has delivered multi-million dollar projects as an IT Project Manager and works as a freelancer providing professional services to corporate giants and emerging startups in the IT space.

Majored in Computer Science

13+ years of experience as a PC hardware reviewer.

8+ years of experience as an IT Project Manager in the corporate sector.

Certified in Google IT Support Specialization.

Admin of PPG, the largest local community of gamers with 130k+ members.

Sajjad is a passionate and knowledgeable individual with many skills and experience in the tech industry and the gaming community. He is committed to providing honest, in-depth product reviews and analysis and building and maintaining a strong gaming community.