- Nvidia’s RTX 5000 GPUs are slated to be launched at CES 2025, but they’re quite power-hungry according to a spec leak, courtesy of Seasonic.

- Team Green’s next-generation GPUs are rumoured to require up to 150W more power than the outgoing RTX 4000 GPUs.

- The RTX 5000 ‘Blackwell’ GPUs will all feature 16-pin connectors while the RTX 5090 is reported to use dual 12VHPWR sockets.

I know, I know.

You and I are both anxiously awaiting the launch of Nvidia’s GeForce RTX 5000 Series GPUs.

However, reports suggest we’ve still got about a month to go. Credible intel (no pun intended) has led me to believe that the successor to the RTX 4000 lineup will be revealed at the next edition of CES (Consumer Electronics Show), which will be held during the second week of January 2025.

As always, CES is poised to be another blockbuster, and fans are gearing up for the reveal with great anticipation.

So what’s the bottom line?

RTX 5000: More Performance = More Power Consumption

As with the buildup to previous generational launches, credible rumours are claiming unprecedented performance gains of up to 70%.

On top of that, there’s reportedly even an RTX 5050 in the works, which is surprising as Nvidia didn’t opt to launch a successor to the RTX 3050 when it launched its RTX 4000 lineup. But of course, there’s always a catch.

For the RTX 5000 Series, it’s the power consumption, or TDP (Thermal Design Power), of the GPUs themselves. With the exception of the aforementioned RTX 5050, every successor to the RTX 4000 ‘Ada Lovelace’ lineup is expected to significantly up the ante with respect to power requirements.

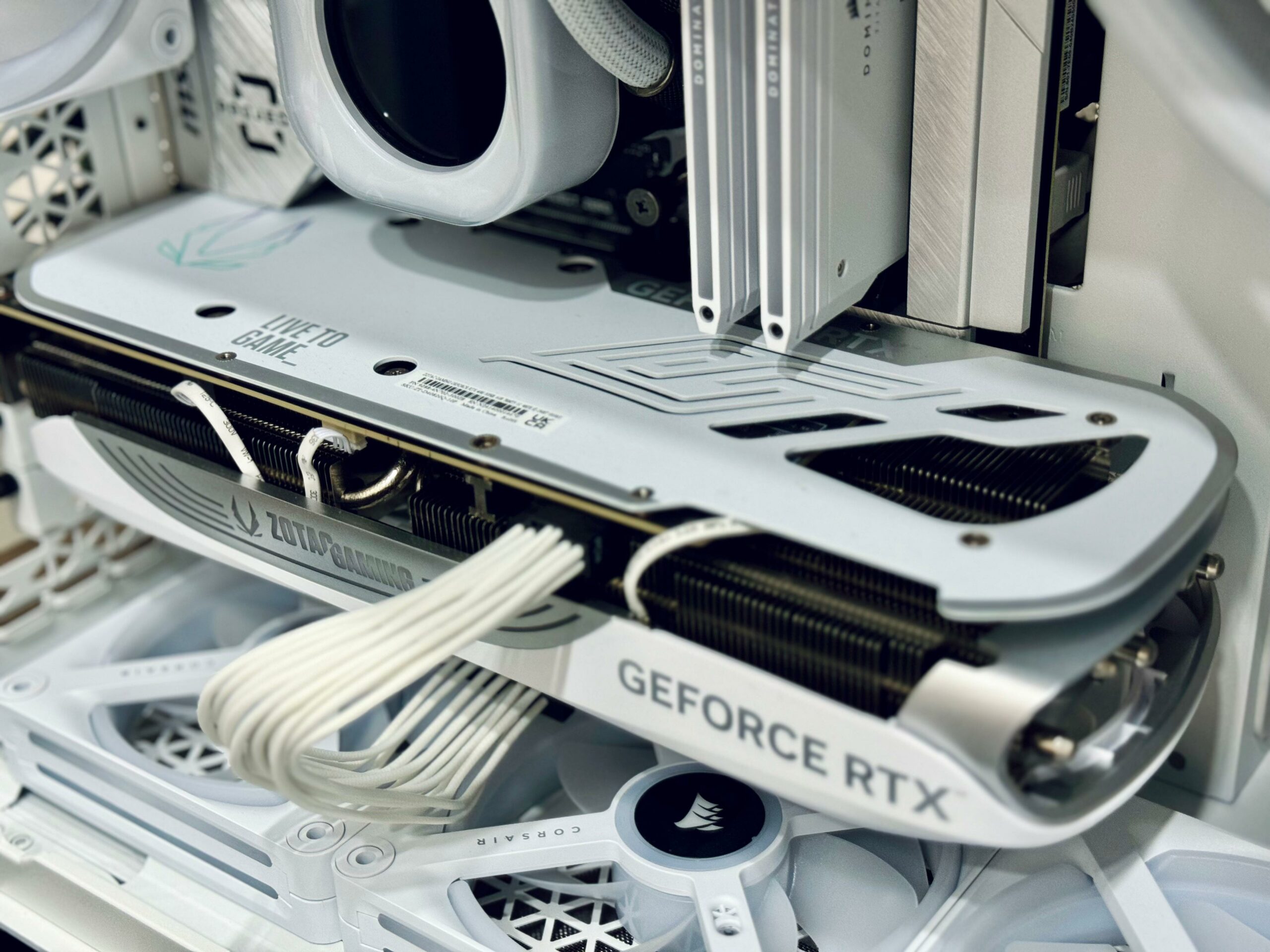

Understandably, increased power equates to increased thermal challenges. That being said, I’m here today NOT to debate what the best cooling solution for one of these RTX 5000 GPUs might be.

Instead, I’m here to go through the leaked figures in detail and help you decide whether you’re going to need a new PSU for these next-generation, bleeding-edge GPUs.

RTX 5000: Let’s Discuss Power Delivery

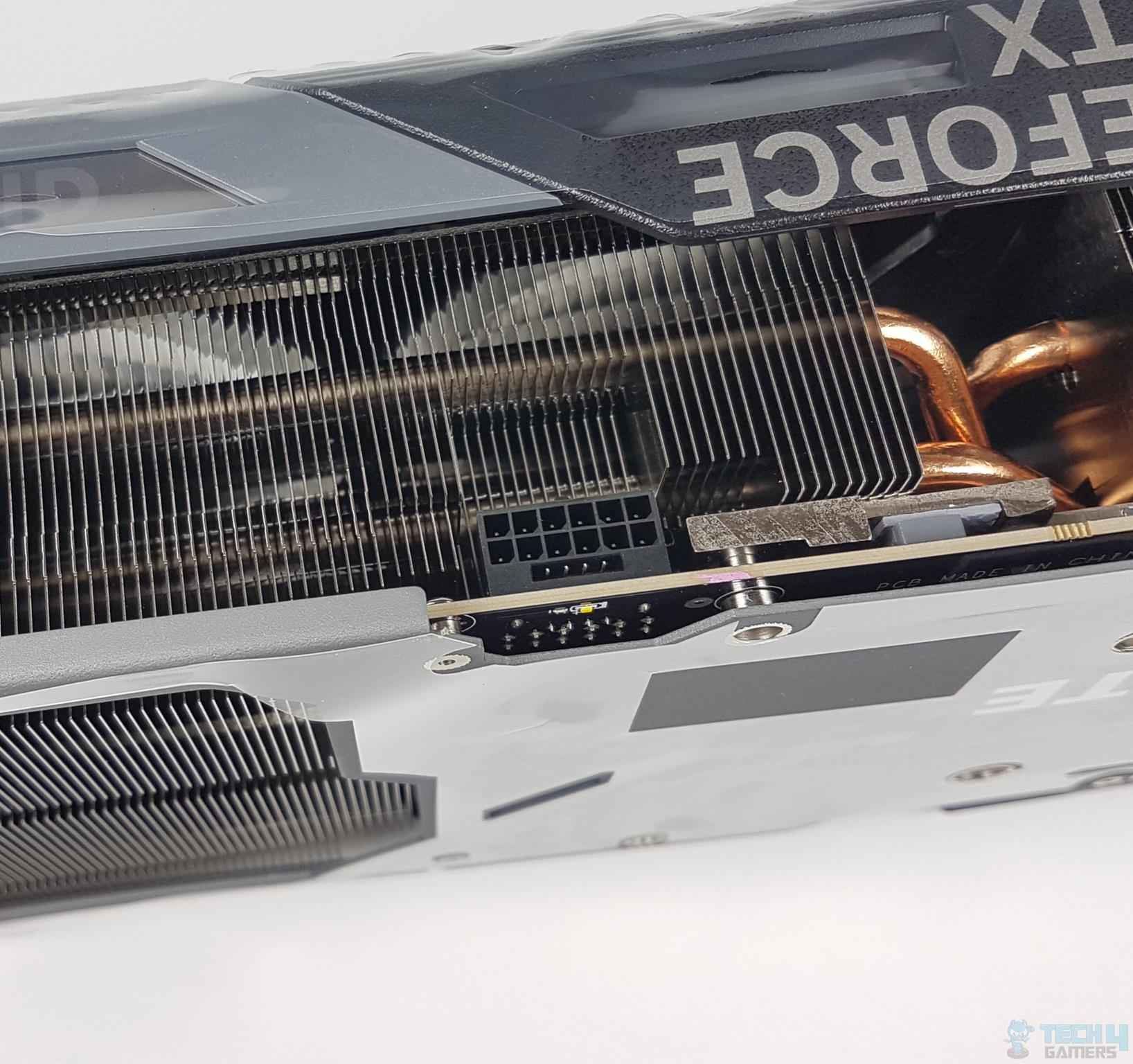

First off, all of the RTX 5000 GPUs codenamed “Blackwell” will be getting 16-pin connectors in order to keep up with the latest generation GPUs’ power-hungry appetite.

Thanks to an unintentional slip-up from renowned PSU manufacturer Seasonic, we can make a credible claim as to what the power requirements for each of Nvidia’s Blackwell GPUs will be.

For starters, the RTX 5050 is rated at a very reasonable 100W, which would be a 30W decrease in comparison to the RTX 3050.

Moving on, the RTX 5060 is slated for a 170W TDP, i.e., a 55W increase over its outgoing predecessor, the RTX 4060.

Furthermore, the RTX 5070 will apparently be consuming 220W, which will equate to a 20W increment over the last generation RTX 4070.

Moreover, the RTX 5080 is expected to gulp 400W of power, an 80W increase over the RTX 4080.

Finally, the flagship RTX 5090 GPU is set to break all records and top the hierarchy at an electrifying TDP of 600W, which would equate to a 150W uptick over Nvidia’s current flagship GPU, the RTX 4090.

Evidently, power comes at the cost of power.
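To make the generational jumps easier to compare at a glance, here is a minimal Python sketch that tabulates the leaked figures quoted above. The older cards’ TDPs are not stated directly in the leak as reported here; they are back-computed from the deltas in the text, and the names `LEAKED_TDP` and `tdp_deltas` are purely illustrative.

```python
# Leaked Blackwell TDPs in watts, as quoted from the Seasonic listing above.
# Each entry: new card -> (leaked TDP, card it is compared against, that card's TDP).
# The older cards' TDPs are back-computed from the deltas quoted in the text.
LEAKED_TDP = {
    "RTX 5050": (100, "RTX 3050", 130),
    "RTX 5060": (170, "RTX 4060", 115),
    "RTX 5070": (220, "RTX 4070", 200),
    "RTX 5080": (400, "RTX 4080", 320),
    "RTX 5090": (600, "RTX 4090", 450),
}


def tdp_deltas(table):
    """Return {new card: change in watts versus the card it is compared against}."""
    return {card: new_w - old_w for card, (new_w, _old_name, old_w) in table.items()}


deltas = tdp_deltas(LEAKED_TDP)
for card, (new_w, old_name, _old_w) in LEAKED_TDP.items():
    # The "+d" format spec prints an explicit sign, so decreases show as negative.
    print(f"{card}: {new_w}W ({deltas[card]:+d}W vs {old_name})")
```

Only the RTX 5050 comes out negative, which lines up with it being the one exception to the upward trend.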

RTX 5000: Should You Spring For A New PSU?

Well, one thing’s for sure. All the new Blackwell GPUs will be featuring 16-pin connectors, even the RTX 5050.

What’s more, reports claim that the flagship RTX 5090 GPU might even boast dual 12VHPWR sockets out of the box.

Keeping all of the data mentioned above in mind, it’s safe to assume that unless you’re springing for an RTX 5050, you’d be well-advised to upgrade your PSU to ready your gaming rig for the latest and greatest GPU tech from Nvidia.

It’s also worth noting that while the RTX 5070 will only sip an additional 20W of power compared to its predecessor, its younger sibling, the RTX 5060, will consume 55W more than the RTX 4060.

That’s something you’ll want to keep in mind if you’re upgrading directly from an RTX 4060 to RTX 5060, RTX 4070 to RTX 5070, and so on and so forth.

Last but not least, if you’re even remotely considering the RTX 5080 or 5090, your PSU should have been upgraded by yesterday. There’s just no question about it.
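If you want a rough number to check your current PSU against, the sketch below shows one way to ballpark it in Python. It is a rule of thumb, not a manufacturer recommendation: the 250W allowance for the rest of the system, the 30% headroom, and the 50W rounding step are all assumptions of mine rather than anything from the leak, and `recommended_psu_watts` is a hypothetical helper.

```python
def recommended_psu_watts(gpu_tdp_w, rest_of_system_w=250, headroom_pct=30, step_w=50):
    """Ballpark PSU wattage: (GPU + rest of system) plus headroom, rounded up.

    All defaults are illustrative assumptions, not figures from the leak:
    - rest_of_system_w: CPU, motherboard, drives, fans, etc.
    - headroom_pct: safety margin for power spikes and PSU ageing.
    - step_w: round up to the next multiple of this many watts.
    """
    # Work in integers (watts x 100) to avoid floating-point rounding surprises.
    needed_x100 = (gpu_tdp_w + rest_of_system_w) * (100 + headroom_pct)
    steps = -(-needed_x100 // (100 * step_w))  # ceiling division
    return steps * step_w


# Leaked TDPs quoted earlier in the article.
for card, tdp in {"RTX 5050": 100, "RTX 5060": 170, "RTX 5070": 220,
                  "RTX 5080": 400, "RTX 5090": 600}.items():
    print(f"{card}: roughly a {recommended_psu_watts(tdp)}W PSU")
```

Swap in your own CPU and system draw for `rest_of_system_w`; a high-end CPU can easily push the estimate up a tier.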

[Wiki Editor]

Ali Rashid Khan is an avid gamer, hardware enthusiast, photographer, and devoted litterateur with a period of experience spanning more than 14 years. Sporting a specialization with regards to the latest tech in flagship phones, gaming laptops, and top-of-the-line PCs, Ali is known for consistently presenting the most detailed objective perspective on all types of gaming products, ranging from the Best Motherboards, CPU Coolers, RAM kits, GPUs, and PSUs amongst numerous other peripherals. When he’s not busy writing, you’ll find Ali meddling with mechanical keyboards, indulging in vehicular racing, or professionally competing worldwide with fellow mind-sport athletes in Scrabble. Currently speaking, Ali’s about to complete his Bachelor’s in Business Administration from Bahria University Karachi Campus.

Get In Touch: alirashid@tech4gamers.com