- Deepfakes are digitally altered images, videos, and voices that are usually manipulated or generated using advanced AI.

- They can lead to serious real-world consequences, such as inciting physical harm toward targeted individuals, causing mental distress, undermining the credibility and reputation of public figures, and more.

- To control this, Meta rolled out a dedicated label to distinguish deepfakes. Other tech companies are also taking measures to limit the adverse effects of deepfakes.

- Self-awareness, critical judgment, and rigorous fact-checking help distinguish deepfakes from authentic content.

We are living in a world where it is becoming harder to distinguish real from fake. With deepfakes becoming more common and accessible, is it too late to control their real-world impact?

What Are Deepfakes?

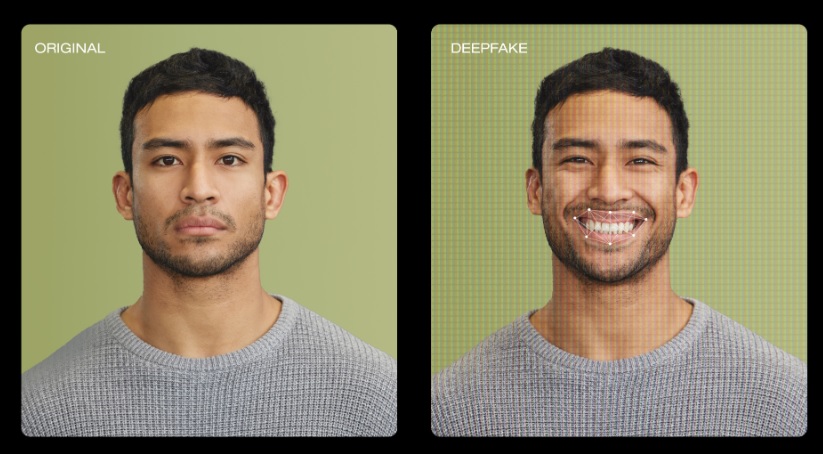

Deepfakes are AI-generated images, videos, and voice clips that resemble or mimic a real person’s face, voice, and appearance. They portray an individual as having said or done something that, in reality, never happened. As a result, they are one of the major techniques for spreading misinformation among the public (Vaccari & Chadwick, 2020).

With deepfakes, you can put anyone’s face over somebody else’s, create a fake recording using someone’s voice, and alter videos to change their context entirely. Consequently, they pose a huge threat, as anyone can manipulate our images and videos to misrepresent us. Here’s an example deepfake video of Microsoft’s co-founder, Bill Gates.

Dangers Of Deepfakes

As technology continues to improve, deepfake crimes are becoming more and more common. Celebrities, politicians, influencers, and even ordinary people can become victims of deepfakes. They can be used as a weapon to discredit someone’s character or position, undermine the credibility of an influential figure, cause an individual mental harm, and damage their public image.

They can even be used to incite the masses against individuals, posing a serious danger to their safety. Furthermore, deepfake images and videos are generated to scam and blackmail people into paying hefty ransoms.

Prominent figures like Barack Obama, Taylor Swift, and Donald Trump have already been targets of deepfake manipulation.

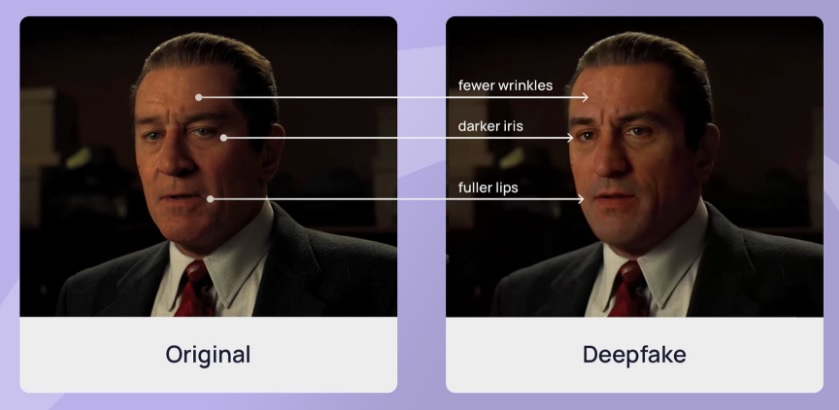

Deepfakes look and sound so real that it is hard to tell fake videos and images from real ones. You can manipulate any aspect of an existing picture with deepfake tech, but are there ways to spot one?

How To Spot A Deepfake?

Because deepfakes rely on AI and artificial synthesis, some clues can reveal whether an image or video is a deepfake. For instance, you can zoom in and look closely for misaligned edits or noticeable differences in color and texture.

For AI-generated deepfakes, many details hint at their inauthenticity. A lack of facial expressions, a robotic voice, bad lip-syncing, physically impossible motions, poor object placement or design, and inconsistent or absent eye blinking are some of the signs that can give a deepfake away.

However, Mike Speirs of the AI consultancy Faculty told The Guardian, “There are all kinds of manual techniques to spot fake images, from misspelled words, to incongruously smooth or wrinkly skin. Hands are a classic one, and then eyes are also quite a good tell… And time is running out – the models are getting better and better.”
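For readers who want to see how one of these clues could be checked automatically, here is a minimal Python sketch of the eye-blinking tell. It assumes you already have an "eye openness" value for every video frame (for example, from a face-landmark tool, which is not shown here). The function names, the `0.2` threshold, and the two-frame minimum are all hypothetical choices made for illustration, not calibrated values, and a low blink rate on its own does not prove that a video is fake.

```python
from typing import Sequence


def count_blinks(openness: Sequence[float],
                 closed_threshold: float = 0.2,
                 min_closed_frames: int = 2) -> int:
    """Count blinks in a per-frame eye-openness series.

    A blink is a run of at least `min_closed_frames` consecutive frames
    whose openness is below `closed_threshold`. Both defaults are
    illustrative assumptions, not calibrated values.
    """
    blinks = 0
    closed_run = 0
    for value in openness:
        if value < closed_threshold:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    # A closed-eye run that reaches the end of the clip also counts.
    if closed_run >= min_closed_frames:
        blinks += 1
    return blinks


def blinks_per_minute(openness: Sequence[float], fps: float) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive fps")
    minutes = len(openness) / fps / 60.0
    return count_blinks(openness) / minutes


if __name__ == "__main__":
    # Hypothetical 10-second clip at 30 fps containing two blinks.
    frames = [0.3] * 300
    frames[50:54] = [0.1] * 4
    frames[200:203] = [0.1] * 3
    print("blinks:", count_blinks(frames))
    print("blinks per minute:", blinks_per_minute(frames, 30))
```

A clip whose subject never or almost never blinks would produce a rate near zero, which is a reason to look more closely at the other clues above rather than a verdict by itself.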

Safety Against Deepfakes

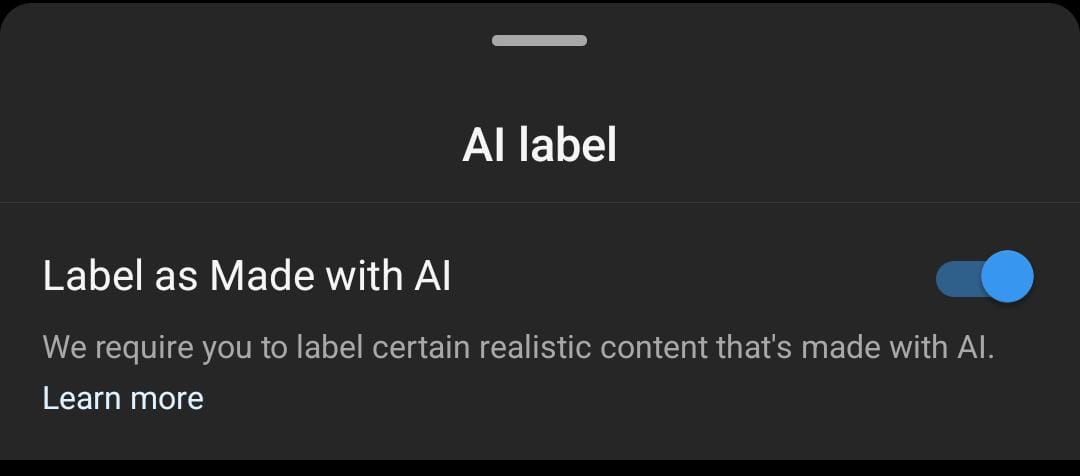

Prominent companies are now taking the dangers and misuse of deepfakes seriously. Meta recently announced that creators must label images and videos generated using AI with the “Made With AI” label on its platforms. If posts are not labeled, Meta will remove them from its platforms.

Earlier this year, 20 big tech firms, including Microsoft and Google, signed the Tech Accord to combat misinformation and political propaganda spread through AI deepfakes ahead of major elections.

Although much damage has been done, there is still time to take proactive measures to deal with this problem.

While companies are actively taking steps to counter the dangers of deepfakes, we should also educate ourselves as individuals about this serious threat. Moreover, strict regulatory measures from authorities can help minimize the dangers of deepfakes.
