Multiplayer games like Call of Duty can often become a breeding ground for hate speech and abusive language due to easy access to voice chats that allow players from different parts of the world to interact with each other.

This has created a pressing need to address offensive communication in online gaming communities, where it is not uncommon for players to resort to abusive language over voice chat after a defeat.

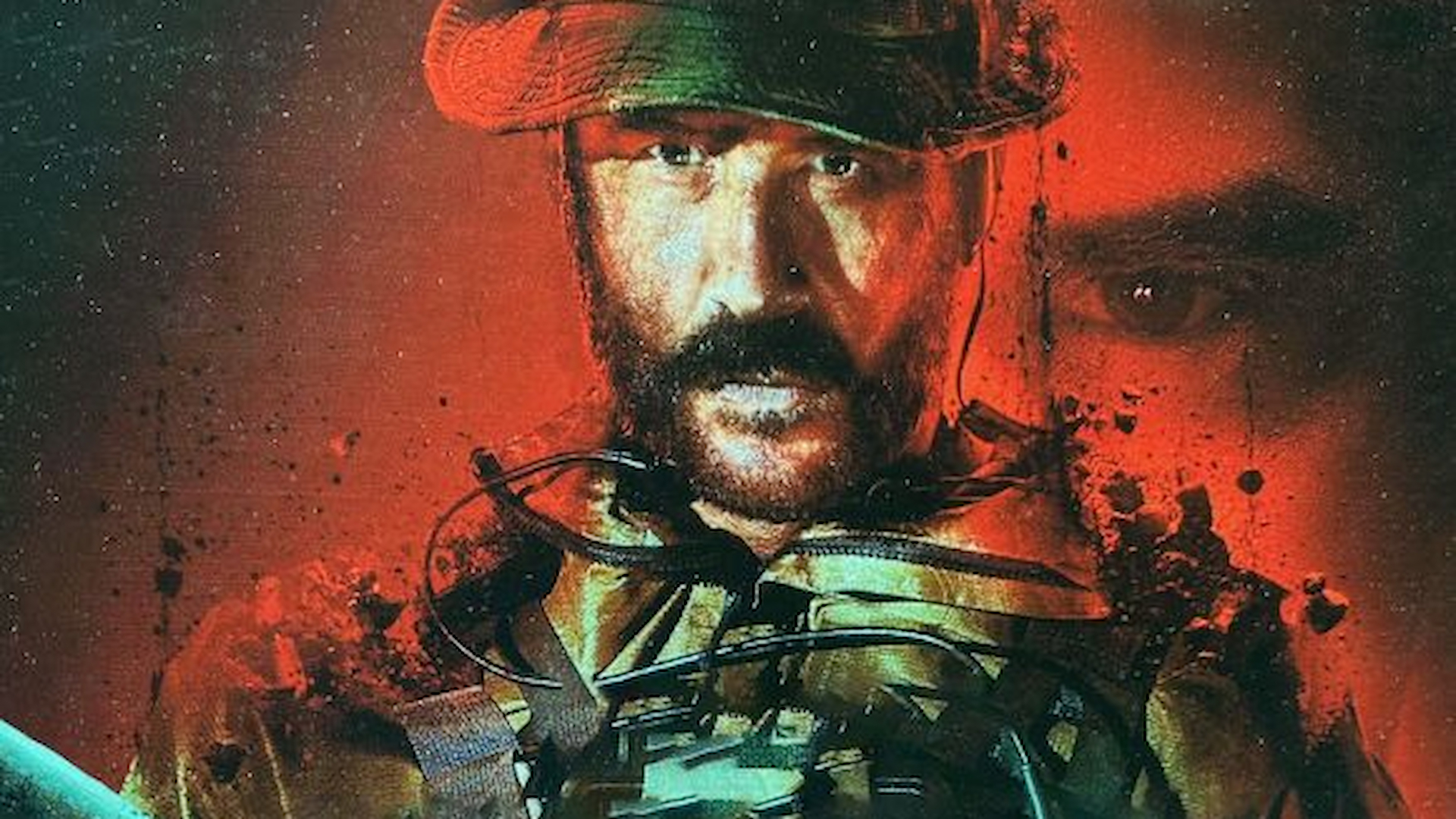

To combat such scenarios, Call of Duty is introducing a new AI tool, ToxMod, in its games.

Why it matters: Online multiplayer games have long struggled to regulate audio-based hate speech and bullying between players.

BREAKING: Activision will add real time in-game voice chat moderation in Call of Duty. AI powered detection will work in-game to listen and report rule breaking issues automatically.

It’s live today in MWII and Warzone in NA and expands worldwide with MW3. Here’s the details: pic.twitter.com/rp6buXWkfK

— CharlieIntel (@charlieINTEL) August 30, 2023

ToxMod is an AI-based voice moderation tool designed to monitor voice chats and help Activision identify players who violate the Call of Duty code of conduct.

The moderation goes live today in North America for Warzone and Call of Duty: Modern Warfare II. This launch will serve as a beta test, with the full worldwide release expected on November 10 alongside Call of Duty: Modern Warfare 3.

ToxMod analyses conversations in real time and sends flagged voice chats to Activision. The gaming giant’s team then reviews those chats and takes appropriate action.

The CEO of Modulate, the company behind ToxMod, has previously said that the tool goes beyond transcription, taking emotion and volume into account to differentiate between friendly and aggressive exchanges.

All of this results in more accurate evaluations.

It is worth noting that the tool will not report players engaged in friendly, competitive banter, as that is part of the game.

ToxMod is part of Activision’s larger effort to control toxicity and harmful behavior in online gaming environments. The gaming giant has already restricted over 1 million accounts for such behavior since last year.

Following the implementation of ToxMod, players can expect accelerated progress in Activision’s efforts.

[News Reporter]

Bawal is an MBBS student by day and a gaming journalist by night. He has been gaming since childhood, growing fond of the creativity and innovation of the industry. His career as a gaming journalist started one year ago, and his journey has allowed him to write reviews, previews, and features for various sites. Bawal has also been cited in reputed websites such as Screenrant, PCGamesN, WCCFTech, GamesRadar, and more.