- NVIDIA’s Generative Physical AI is a revolutionary technology that allows AI to control robots in real time.

- The AI is trained in simulated environments and with human demonstrations to make the robots perform complex tasks and move more naturally.

- This technology has the potential to automate many tasks currently requiring human labor.

The world of technology is evolving rapidly, with breakthroughs arriving every few months. Of these, AI and reinforcement learning have been some of the most important advancements in human history.

While most people think of a digital program when they hear the word AI, companies have been working towards giving these models a physical body to work with. Among these efforts, NVIDIA’s Generative Physical AI stands out as revolutionary.

NVIDIA: Advancing Technology

NVIDIA needs no introduction. It has driven advances across the technology industry, but most people know it for its hardware and software aimed at improving the gaming experience.

From its graphics cards to its drivers, it has made the lives of gamers throughout the world better. However, it also works on other technologies, as seen in NVIDIA’s CUDA platform being used in quantum computing research.

Its latest development is Generative Physical AI, a way for physical machines to make decisions in real time.

What Is NVIDIA’s Generative Physical AI?

One of the purposes of human advancements in science is to make our lives more convenient. This means doing away with any effort that has a workable alternative.

Anything requiring motor functions fits this criterion, as machines can often perform physical tasks with more accuracy than humans. However, these machines usually have to be operated by a human, which isn’t efficient.

The goal of Physical AI and other similar projects is to automate these things that have no reason to require human interaction, so humans can focus on things that do require their minds.

Generative Physical AI is an AI that works out what actions to take in real time and autonomously applies the best course of action to a physical body.

How Does Physical AI Work?

If you have any interest in AI, chances are you already know what reinforcement learning is. In the simplest of terms, reinforcement learning trains an AI through trial and error.

This can be done in various ways, but the core idea is to reward the machine whenever its result gets closer to the desired one. It can then be paired with neural networks to further increase its effectiveness.
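To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, one common reinforcement learning method. It is not NVIDIA’s training code: the toy corridor world, the function names, and every parameter value are assumptions chosen purely for illustration.

```python
import random

def train_q_table(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor (hypothetical example).

    The agent starts in cell 0 and is rewarded only when it reaches
    the last cell. q[state][action] estimates how good each action is.
    """
    rng = random.Random(seed)
    moves = (-1, +1)                      # action 0 = left, action 1 = right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Trial and error: sometimes try a random action.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt = min(max(state + moves[action], 0), goal)
            reward = 1.0 if nxt == goal else 0.0
            # Nudge the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q

def greedy_policy(q):
    """Best-known action for every non-goal cell."""
    return ["left" if left > right else "right" for left, right in q[:-1]]

q_table = train_q_table()
print(greedy_policy(q_table))
```

Nobody tells the agent that moving right is correct. It only ever sees the reward at the end of the corridor, and the preference for moving right emerges from repeated attempts.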

Simulated Environments

Physical AI also uses virtual simulations for the first step of learning. This is important, as giving the program a physical body at the start can result in serious equipment damage and slow down the process.

There is also only a limited number of physical machines. In a simulated environment, millions of generations and variations of the machine can be run at once.

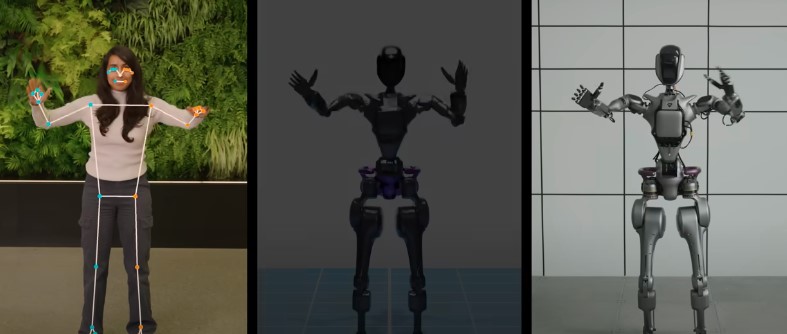
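As a rough illustration of why simulation scales so well, the sketch below tests several candidate controllers against many randomized copies of a toy machine. Everything in it is an assumption made for the example: the one-dimensional physics, the `simulate` and `evaluate_population` helpers, and the parameter ranges. A real simulator would also run the copies in parallel on GPUs, while this loop runs them one after another.

```python
import random

def simulate(gain, mass, steps=200, dt=0.05, target=1.0):
    """Toy 1-D physics: push a mass toward a target position.

    Returns the final distance from the target (lower is better).
    """
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        # Spring-like pull toward the target plus simple damping.
        force = gain * (target - pos) - 2.0 * vel
        vel += (force / mass) * dt
        pos += vel * dt
    return abs(target - pos)

def evaluate_population(gains, n_variants=50, seed=0):
    """Score each candidate gain on many randomized machine variants."""
    rng = random.Random(seed)
    # Each variant gets a slightly different mass, standing in for the
    # small differences between real machines.
    masses = [rng.uniform(0.5, 2.0) for _ in range(n_variants)]
    scores = {}
    for gain in gains:
        errors = [simulate(gain, mass) for mass in masses]
        scores[gain] = sum(errors) / len(errors)
    return scores

scores = evaluate_population([0.0, 0.5, 2.0, 8.0])
best_gain = min(scores, key=scores.get)
print(best_gain, scores[best_gain])
```

Trying 4 controllers on 50 variants costs 200 simulated runs and no hardware. Scaling either number up only takes more compute, not more robots.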

Human Sampling

NVIDIA also uses human sampling to make its Physical AI even stronger. It does this by showing the AI what humans would do in certain situations, giving it a chance to learn from those examples and try to replicate them.

Unlike training in simulation alone, this lets the AI perform tasks in a more natural way. In a purely simulated environment, the AI can often exploit glitches in the simulation to score better results. In a real-world environment, behavior learned that way can be extremely dangerous.
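Learning from human examples is often called imitation learning, and its simplest form is behavior cloning: fit a policy so that it reproduces recorded (observation, action) pairs. The sketch below is a hedged illustration of that idea only. The demonstration data is made up, and the linear policy and the `fit_linear_policy` helper are assumptions rather than anything NVIDIA has described.

```python
def fit_linear_policy(demos):
    """Least-squares fit of action = w * observation + b.

    demos is a list of (observation, action) pairs recorded from a
    demonstrator.
    """
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in demos)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b

# Hypothetical demonstrations: the observation is how far off-centre the
# robot is, and the action is how hard the human steered back.
demos = [(-2.0, 1.0), (-1.0, 0.5), (0.0, 0.0), (1.0, -0.5), (2.0, -1.0)]
w, b = fit_linear_policy(demos)

def policy(observation):
    """Cloned policy: imitate what the demonstrator would have done."""
    return w * observation + b

print(policy(3.0))
```

Because the policy is fitted to what a person actually did, it has no incentive to chase a glitch in a reward function. The trade-off is that it can only be as good as the demonstrations it was given.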

What To Expect From Physical AI?

NVIDIA has certainly made leaps in machine learning, but the technology is nowhere near polished enough even for testing, much less for commercial use. It might take years or even decades before we get to see its full use, but it is still a leap in technology.

Presently, robots using Physical AI have been shown to perform complicated tasks such as drumming or threading a needle without human help.

Physical AI has also been shown to improve the way these robots traverse and move, making them less likely to trip and fall. By learning from humans, they’ve learnt to balance themselves without any manual calculations.

If this continues to improve at the same pace, it won’t be a stretch to say most tasks requiring motor movement can be handed over to machines.

Transport, assembly, and many other repetitive tasks could be left for machines to handle by themselves, with Physical AI serving as a replacement for human supervision.

Heya, I’m Asad (Irre) Kashif! I’ve been writing about anything and everything since as far back as I can remember. Professionally, I started writing five years ago, working both as a ghostwriter and writing under my own name. As a published author and a council member in Orpheus, my journey in the world of writing has been fulfilling and dynamic.

I still cherish the essays I wrote about my favorite PS2 games, and I’m thrilled to have transformed my passion for game journalism into a career. I’m a theory crafter for Genshin Impact (and now Wuthering Waves) and have a deep love for roguelites and roguelikes. While I prefer indie games for their distinct aesthetic and vibes, I do enjoy triple-A games occasionally. I’ve also been playing League since season 6, and I main Akali! I have a keen interest in discovering and playing more obscure games, as well as researching forgotten titles. Additionally, I am a front-end programmer who dabbles a bit in gamedev occasionally.